Astrophotography on the Pixel 4 was announced as one of the highlight features during its launch event. The sample photos shown by Google were impressive, and once the phone was released, social media started filling up with incredible photos people took with their Pixel 4. In this article, we explain how Google produces beautiful starry photos of the night sky with just a smartphone camera.

Taking starry photos of the night sky has been possible with traditional DSLRs for many years. But they often require heavy gear, patience, and technical knowledge to get good results. With the power of computational photography, Google lets users take amazing photos of the night sky with just a Pixel 4. Pixel 3 users can also use this feature, but with less impressive results.

To begin with, a brighter, well-lit photo simply needs more light. Generally, a bigger aperture allows more light to reach the sensor, giving a brighter photo. But smartphones have very small lenses, which makes that difficult. To get around this, Google exposes the sensor for a longer time to collect more light.

This technique comes with its own set of problems. The major ones are motion in the scene and blur caused by the user’s hand shaking. Google’s solution is to break the long exposure into multiple shorter exposures and combine them in post-processing to get a photo that is well lit. This also addresses another problem: the noise that is inherently present in photos when a sensor is exposed for long periods.
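
To see why combining several short exposures helps with noise, here is a minimal Python sketch. It is not Google's pipeline: it assumes a perfectly still scene, purely random Gaussian noise, and a plain per-pixel average, and every name and number in it (`capture_frame`, `average_frames`, the signal and noise levels) is made up for illustration.

```python
import random
import statistics


def capture_frame(true_signal, noise_sigma, rng):
    """Simulate one short exposure: the true scene plus random sensor noise."""
    return [value + rng.gauss(0.0, noise_sigma) for value in true_signal]


def average_frames(frames):
    """Merge frames by taking the per-pixel mean across all of them."""
    count = len(frames)
    return [sum(pixels) / count for pixels in zip(*frames)]


def residual_noise(image, true_signal):
    """Standard deviation of the error between an image and the true scene."""
    errors = [a - b for a, b in zip(image, true_signal)]
    return statistics.pstdev(errors)


rng = random.Random(0)            # fixed seed so the run is repeatable
true_signal = [10.0] * 2000       # a flat, dim patch of sky (assumed values)
frames = [capture_frame(true_signal, 5.0, rng) for _ in range(15)]

single_noise = residual_noise(frames[0], true_signal)
merged_noise = residual_noise(average_frames(frames), true_signal)
print(f"noise in one frame:       {single_noise:.2f}")
print(f"noise after averaging 15: {merged_noise:.2f}")
```

Under these assumptions, the noise in the averaged image shrinks roughly with the square root of the number of frames, so 15 frames give a result that is close to four times cleaner than any single frame. Real frames would also need to be aligned before merging, which this sketch skips.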

Through experimentation and considering user patience levels, Google decided to allow exposures of up to 16 seconds per frame and up to 15 frames for one final composite photo, for a maximum total exposure time of 4 minutes. The frames are carefully aligned so that stars stay single points rather than trails, and the noise in the frames is averaged out and reduced.
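
The arithmetic behind the 4-minute figure is easy to check. The short Python sketch below uses only the two limits quoted above; the `capture_plan` helper and its clamping behaviour are hypothetical, added just to show how the caps bound the total exposure.

```python
MAX_FRAME_EXPOSURE_S = 16  # per-frame cap quoted in the article
MAX_FRAMES = 15            # frame-count cap quoted in the article


def capture_plan(requested_frame_s, requested_frames):
    """Clamp a requested capture to the caps; return (frame_s, frames, total_s)."""
    frame_s = min(requested_frame_s, MAX_FRAME_EXPOSURE_S)
    frames = min(requested_frames, MAX_FRAMES)
    return frame_s, frames, frame_s * frames


frame_s, frames, total_s = capture_plan(16, 15)
print(f"{frames} frames x {frame_s} s = {total_s} s ({total_s / 60:g} min)")
# prints: 15 frames x 16 s = 240 s (4 min)
```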

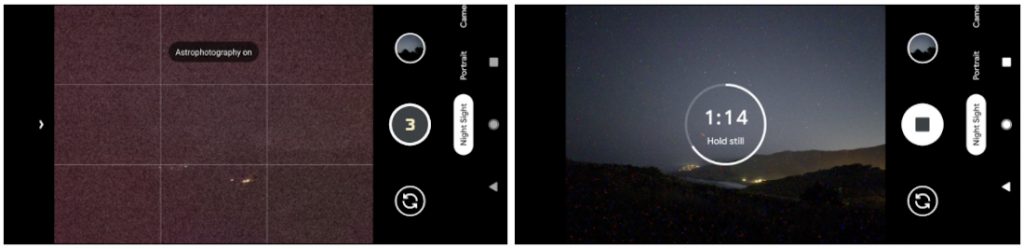

To help users frame their photo and decide focus points, Google also made several updates to the live viewfinder. The first is a “post-shutter viewfinder”. Once the user starts a capture, the screen (viewfinder) is updated with the latest composite photo as each frame, lasting up to 16 seconds, is captured. Once the user is satisfied with the framing, they can take a second photo with the correct composition.

Autofocus is handled with two separate initial shots of one second each, which is enough time for the phone to decide the focus points. Google has also accounted for skies appearing unnaturally bright in astrophotography shots. Using AI, the phone selectively darkens only the sky so that it appears more naturally lit relative to the landscape (shown in the photo above, where the right side shows the image with the sky selectively darkened).
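
The selective darkening step can be pictured as a masked brightness adjustment. The Python sketch below is only an illustration: it assumes a sky mask is already available (on the phone this would come from the AI model, which is not reproduced here), and the `darken_sky` function, the `sky_gain` parameter, and the tiny sample image are all hypothetical.

```python
def darken_sky(image, sky_mask, sky_gain=0.5):
    """Scale brightness down where the mask marks sky; leave the rest untouched.

    image    -- rows of pixel brightness values
    sky_mask -- rows of values from 0.0 (landscape) to 1.0 (sky)
    sky_gain -- brightness multiplier applied to fully-sky pixels (assumed value)
    """
    result = []
    for pixel_row, mask_row in zip(image, sky_mask):
        result.append([
            # mask 1.0 -> pixel * sky_gain, mask 0.0 -> pixel unchanged,
            # in-between values blend smoothly across the horizon.
            pixel * (1.0 - mask * (1.0 - sky_gain))
            for pixel, mask in zip(pixel_row, mask_row)
        ])
    return result


image = [
    [200.0, 200.0],   # top row: overly bright sky
    [80.0, 80.0],     # bottom row: landscape
]
sky_mask = [
    [1.0, 1.0],
    [0.0, 0.0],
]
darkened = darken_sky(image, sky_mask)
print(darkened)
```

Using a fractional mask rather than a hard yes/no label is a design choice in this sketch: it avoids a visible seam where the sky meets the landscape.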

Along with a few other features, Google has created software that, when it works correctly, produces photos that are nothing short of brilliant. To learn about astrophotography in more detail, check out this blog post by the Google AI team.