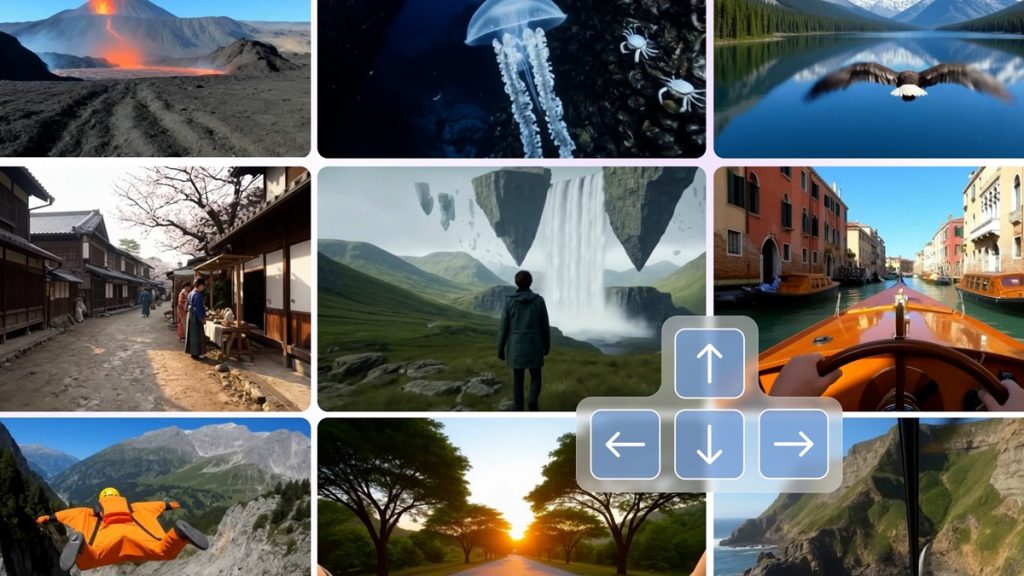

Google DeepMind has announced Genie 3, a world model that generates interactive 3D environments from a single image. The model, trained without supervision or environment labels, lets users control a character in a simulated world derived from the input image.

Training Genie 3 with Unsupervised Learning

Genie 3 is designed as a world model that predicts future frames, rewards, and actions from video data. Unlike previous models, Genie 3 learns without supervision: it is trained purely on internet videos and their associated actions, with no labeled environments.

It can generalize to new visual inputs, generating interactive, controllable environments without fine-tuning. The training set includes 30 million video clips paired with action traces, making it one of the largest unsupervised datasets for world modeling to date.
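To make the idea of "video clips paired with action traces" concrete, here is a minimal sketch of how one clip might be turned into next-frame prediction examples. It assumes exactly one recorded action between each pair of consecutive frames; the function name `make_transitions` and the data layout are hypothetical and are not taken from DeepMind's description.

```python
from typing import List, Sequence, Tuple, TypeVar

F = TypeVar("F")  # frame type (an image, a token list, ...)
A = TypeVar("A")  # action type


def make_transitions(frames: Sequence[F], actions: Sequence[A]) -> List[Tuple[F, A, F]]:
    """Pair each frame with the action taken and the frame that followed.

    Assumption: actions[t] is the action applied between frames[t] and
    frames[t + 1], so a clip of N frames carries N - 1 actions.
    """
    if len(actions) != len(frames) - 1:
        raise ValueError("expected exactly one action per consecutive frame pair")
    return [(frames[t], actions[t], frames[t + 1]) for t in range(len(actions))]


# Toy clip: three frames (named by string) and the two actions between them.
clip = ["frame0", "frame1", "frame2"]
trace = ["right", "left"]
print(make_transitions(clip, trace))
# [('frame0', 'right', 'frame1'), ('frame1', 'left', 'frame2')]
```

A world model trained on such triples would learn to predict the third element from the first two.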

From Image to Playable Game

Users provide a single image, which can be a drawing, a render, or a real-world photo. Genie 3 then:

- Extracts the spatial layout from the image,

- Uses its latent action model to understand possible movements,

- Renders a dynamic, controllable 3D environment where the user can interact as if playing a side-scrolling video game.

According to DeepMind, the model supports motion and interaction via a built-in latent action representation that handles character movement based on user inputs. This allows the system to simulate physics, character control, and environment responses in real time.
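The interaction described above can be pictured as a simple loop: each user input is mapped to a latent action, and the model produces the next frame from the frames so far. The sketch below is illustrative only. The key names, the `KEY_TO_LATENT_ACTION` mapping, and `toy_step` (which stands in for the learned model by shifting a one-dimensional frame) are all assumptions, not DeepMind's interface.

```python
from typing import Callable, Iterable, List

Frame = List[int]                            # toy 1-D frame; 1 marks the character
StepFn = Callable[[List[Frame], int], Frame]

# Assumed mapping from user inputs to discrete latent action ids.
KEY_TO_LATENT_ACTION = {"left": 0, "right": 1, "noop": 2}


def play(initial_frame: Frame, keys: Iterable[str], step_fn: StepFn) -> List[Frame]:
    """Generate one new frame per user input, conditioning on the full history."""
    history = [initial_frame]
    for key in keys:
        action = KEY_TO_LATENT_ACTION[key]
        history.append(step_fn(history, action))
    return history


def toy_step(history: List[Frame], action: int) -> Frame:
    """Hypothetical stand-in for the model: rotate the last frame by one cell."""
    frame = list(history[-1])
    if action == 0:                          # left
        return frame[1:] + frame[:1]
    if action == 1:                          # right
        return frame[-1:] + frame[:-1]
    return frame                             # noop


frames = play([0, 1, 0, 0], ["right", "right", "left"], toy_step)
print(frames[-1])  # [0, 0, 1, 0]
```

Passing the whole history to `step_fn` reflects that each new frame depends on earlier frames and not only on the most recent one; the toy stand-in happens to use just the last frame.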

Model Architecture

Genie 3’s pipeline includes:

- Spatiotemporal video tokenizer: Converts video frames into discrete tokens for efficient learning.

- Latent action model: Learns a compressed representation of actions from video-action pairs.

- Dynamics model: Predicts the next frames and states.

- Renderer: Converts learned tokens back into realistic 3D-like frames.

All components were trained end-to-end, using data from open internet sources, without any game engine involvement.
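As a rough illustration of how the four components listed above fit together, the following toy sketch replaces each learned model with a hand-written function. It is not Genie 3's implementation: a "frame" here is a short string, the codebook `VOCAB` and every function name are hypothetical, and nothing is actually trained.

```python
from typing import List

# Assumed toy codebook: "." is empty space, "@" is the character, "#" is a wall.
VOCAB = {".": 0, "@": 1, "#": 2}
INVERSE_VOCAB = {token: char for char, token in VOCAB.items()}
EMPTY, PLAYER, WALL = VOCAB["."], VOCAB["@"], VOCAB["#"]


def tokenize(frame: str) -> List[int]:
    """Tokenizer stand-in: map each cell of the frame to a discrete token."""
    return [VOCAB[cell] for cell in frame]


def infer_latent_action(prev_tokens: List[int], next_tokens: List[int]) -> int:
    """Latent action stand-in: the character's signed displacement between frames."""
    return next_tokens.index(PLAYER) - prev_tokens.index(PLAYER)


def predict_next(tokens: List[int], action: int) -> List[int]:
    """Dynamics stand-in: move the character unless an edge or wall blocks it."""
    pos = tokens.index(PLAYER)
    target = pos + action
    if not 0 <= target < len(tokens) or tokens[target] == WALL:
        return list(tokens)
    out = list(tokens)
    out[pos], out[target] = EMPTY, PLAYER
    return out


def render(tokens: List[int]) -> str:
    """Renderer stand-in: map tokens back to a displayable frame."""
    return "".join(INVERSE_VOCAB[token] for token in tokens)


before, after = tokenize("..@..#"), tokenize("...@.#")
action = infer_latent_action(before, after)
print(action)                                # 1
print(render(predict_next(after, action)))   # ....@#
```

In the real system each of these functions would be a learned neural network operating on video tokens; the sketch only shows the order in which data flows through the pipeline.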

Limitations

DeepMind notes that Genie 3:

- Is limited to 2D side-scrolling environments,

- Can only simulate characters with fixed movement directions (left/right),

- Doesn’t support long-term memory or high-level planning,

- May produce low visual quality on out-of-distribution (unseen) inputs.

Genie 3 is an early prototype, intended for exploring how controllable environments can be learned from passive video.

Availability

Genie 3 is currently available as a limited research preview via a public web demo. DeepMind says that it may expand access in the future based on feedback and safety evaluations.