Parents always worry about their children being taken advantage of online, whether through exposure to sexually inappropriate content or through sexually explicit messages. To help prevent this unwanted exposure, Apple is introducing a number of new features across iOS, iPadOS and macOS.

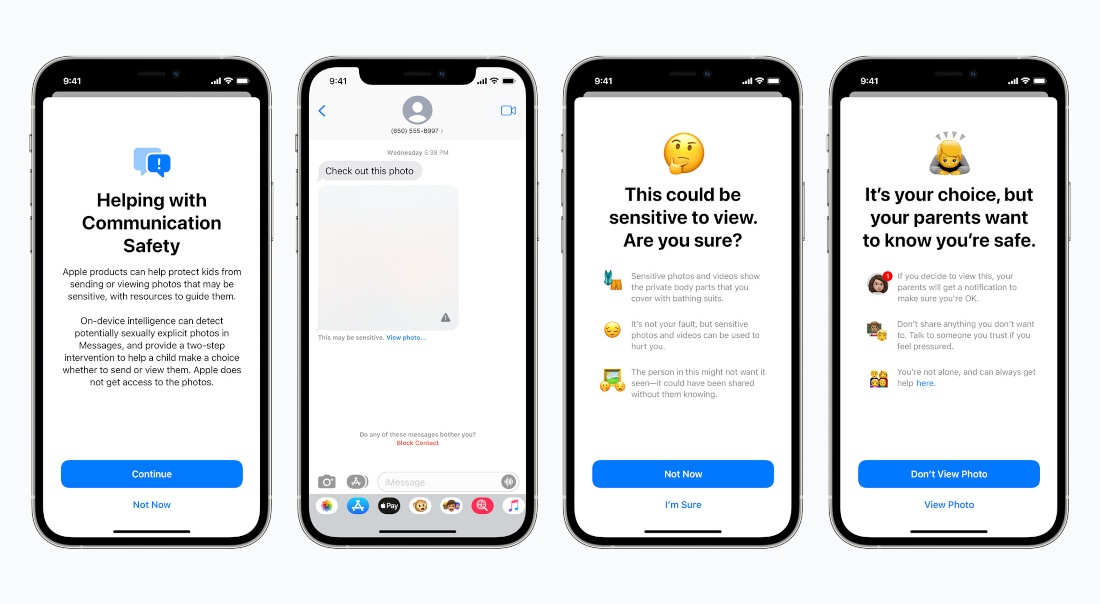

The first feature is for iMessage, where the app will use on-device machine learning to analyze image attachments and determine whether a photo is sexually explicit. If a received photo is flagged as explicit, iMessage will blur it, warn the child, present helpful resources, and reassure them that it is okay not to view the photo. If the child chooses to view it anyway, their parents will get a message.

In addition to screening received content, iMessage will also protect children from sending sexually explicit photos, and parents can receive a message if the child chooses to send one.

The second update is for detecting Child Sexual Abuse Material (CSAM) online. Apple will use a database of known CSAM image hashes provided by NCMEC (National Center for Missing and Exploited Children) and other child safety organizations, and compare images against it before they are stored in iCloud Photos. If an account crosses a threshold of known CSAM content, Apple will then manually review each report. If the report is found to be accurate, Apple will disable the user’s account and send a report to NCMEC.
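The matching-plus-threshold idea described above can be illustrated with a minimal sketch. This is not Apple's implementation: the function names, the placeholder byte strings, and the threshold value are all hypothetical, and SHA-256 is swapped in as a stand-in hash purely for illustration (an exact cryptographic hash only matches byte-identical files, so a real system would likely need a different kind of hash). The rule that "crossing" the threshold means reaching it is also an assumption.

```python
import hashlib
from typing import Iterable, Set


def digest(data: bytes) -> str:
    """Stand-in hash (assumption): SHA-256 hex digest of the raw bytes."""
    return hashlib.sha256(data).hexdigest()


def count_known_matches(uploads: Iterable[bytes], known_hashes: Set[str]) -> int:
    """Count how many uploads hash to an entry in the known-hash database."""
    return sum(1 for data in uploads if digest(data) in known_hashes)


def needs_manual_review(uploads: Iterable[bytes], known_hashes: Set[str],
                        threshold: int) -> bool:
    """Flag an account only once its match count reaches the threshold (assumed rule)."""
    return count_known_matches(uploads, known_hashes) >= threshold


# Placeholder byte strings stand in for image files and database entries.
known = {digest(b"known-a"), digest(b"known-b")}
uploads = [b"holiday-photo", b"known-a", b"known-b"]
```

With these placeholder values, two of the three uploads match the database, so a hypothetical threshold of 2 would send the account to manual review while a threshold of 3 would not.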

The last update is for Siri and Search, which will intervene when users perform searches related to CSAM, and provide additional resources to help children and parents stay safe online.

These features are coming later this year in updates to iOS 15, iPadOS 15, watchOS 8, and macOS Monterey.