Google has introduced three fundamental shifts to search, including a range of new features that use AI to make your search experience more visual. The company also announced that it is making Google Images more useful and powerful.

Earlier this year, Google worked with the AMP Project to announce AMP stories, an open source library that makes it easy for anyone to create a story on the open web. Google has now announced that it will use AI to intelligently construct AMP stories and surface this content in Search. It is starting with stories about notable people, such as celebrities and athletes, providing a glimpse into facts and important moments from their lives in a rich, visual format. This format also lets you discover content from the web.

Using computer vision, Google can now deeply understand the content of a video and help you quickly find the most useful information in a new experience called featured videos. With featured videos, Google aims to use its understanding of a topic space to show the most relevant videos for each subtopic within it.

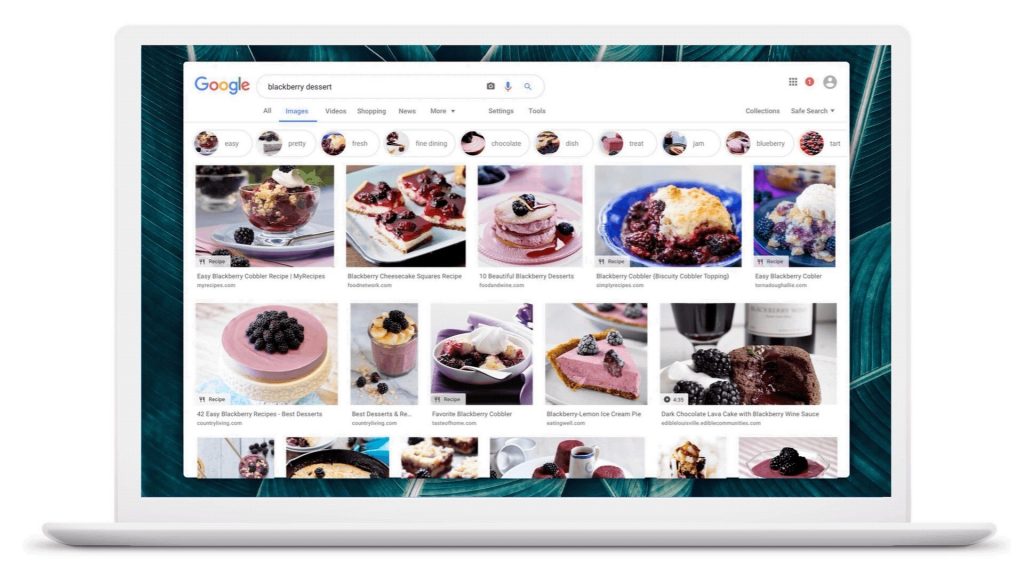

Google is also introducing new features to help you find visual information more easily. It now prioritizes sites where the image is central to the page and appears higher up on it. Starting this week, Google will show more context around images, including captions that show the title of the web page where each image is published. It will also suggest related search terms at the top of the page for more guidance.

In the coming weeks, Google will bring Lens to Google Images to help you explore and learn more about the visual content you find during your searches. Lens will also let you “draw” on any part of an image, even if it’s not pre-selected by Lens, to trigger related results and dive even deeper into what’s in your image.