Google has released the first Android 11 Developer Preview, a bit earlier than usual this time, so that developers can start testing it ahead of the final release later this year. The release brings new features to help users manage access to sensitive data and files, and Google says it has hardened critical areas of the platform to keep the OS resilient and secure. For developers, Android 11 brings several features, including enhancements for foldables and 5G, call-screening APIs, new media and camera capabilities, machine learning improvements, and more.

The Android 11 Developer Preview is available for the Pixel 2 / 2 XL, Pixel 3 / 3 XL, Pixel 3a / 3a XL, and Pixel 4 / 4 XL from the Android developer website. Developer Preview 2 will be available in March, Developer Preview 3 in April, Beta 1 in May during Google I/O, and Beta 2 in June (with final APIs and the official SDK), followed by Beta 3 and the final stable release at the end of Q3 2020.

New features in Android 11

- 5G – Dynamic meteredness API – With this API you can check whether the connection is unmetered and, if so, offer higher resolution or quality that may use more data. The API now also covers cellular networks, so you can identify users whose carriers offer truly unmetered data while they are connected to the carrier’s 5G network.

- 5G – Bandwidth estimator API – Makes it easier to check downstream and upstream bandwidth without polling the network or computing your own estimate. If the modem doesn’t provide this information, the platform falls back to a default estimate based on the current connection.

- New screen types – Pinhole and waterfall screens – Apps can manage pinhole screens and waterfall screens using the existing display cutout APIs.

- Dedicated conversations section in the notification shade – Users can instantly find their ongoing conversations with people in their favorite apps.

- Bubbles – Bubbles keep conversations in view and accessible while users multitask on their phones. Messaging and chat apps should use the Bubbles API on notifications to enable this in Android 11.

- Insert images into notification replies – If your app supports image copy/paste, you can now let users insert images directly into notification inline replies, not just in the app itself, for richer communication.

- Neural Networks API 1.3 – Quality of Service APIs support priority and timeouts for model execution; Memory Domain APIs reduce memory copying and transformation across consecutive model executions; and expanded quantization support adds signed integer asymmetric quantization, where signed integers are used in place of floating-point numbers to enable smaller models and faster inference.
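
Signed integer asymmetric quantization maps each float x to an 8-bit integer q through a scale and a zero point, so that x ≈ scale * (q - zeroPoint). This is the relationship NNAPI documents for its `ANEURALNETWORKS_TENSOR_QUANT8_ASYMM_SIGNED` operand type. The plain-Java sketch below shows only the arithmetic and does not call NNAPI, which is a C API in the NDK. Deriving the scale and zero point from a [min, max] range is one common convention and is an assumption here. The `AsymmetricQuant` class is hypothetical.

```java
// Hypothetical sketch: signed 8-bit asymmetric (affine) quantization,
// real = scale * (q - zeroPoint), with q in [-128, 127].
// Plain Java for illustration; this does not call NNAPI.
final class AsymmetricQuant {
    static final int QMIN = -128;
    static final int QMAX = 127;

    final float scale;
    final int zeroPoint;

    // Choose scale and zero point so that [min, max] spans [QMIN, QMAX].
    // (Assumed convention; NNAPI only fixes the real = scale * (q - zp) mapping.)
    AsymmetricQuant(float min, float max) {
        // Widen the range to include 0 so 0.0f stays exactly representable.
        float lo = Math.min(min, 0f);
        float hi = Math.max(max, 0f);
        float s = (hi - lo) / (QMAX - QMIN);
        this.scale = (s == 0f) ? 1f : s; // avoid division by zero for an all-zero range
        int zp = Math.round(QMIN - lo / this.scale);
        this.zeroPoint = clamp(zp);
    }

    // Float -> int8: divide by scale, round to nearest, shift by the zero point, saturate.
    byte quantize(float x) {
        return (byte) clamp(Math.round(x / scale) + zeroPoint);
    }

    // int8 -> float: undo the shift and rescale.
    float dequantize(byte q) {
        return scale * (q - zeroPoint);
    }

    private static int clamp(int v) {
        return Math.max(QMIN, Math.min(QMAX, v));
    }
}
```

With a range of [-1, 3], the scale is 4/255 and the zero point is -64. As a result, 0.0 maps exactly to -64, and values outside the range saturate at -128 or 127. Each int8 value stores a quarter of a float32, which is where the smaller models come from.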

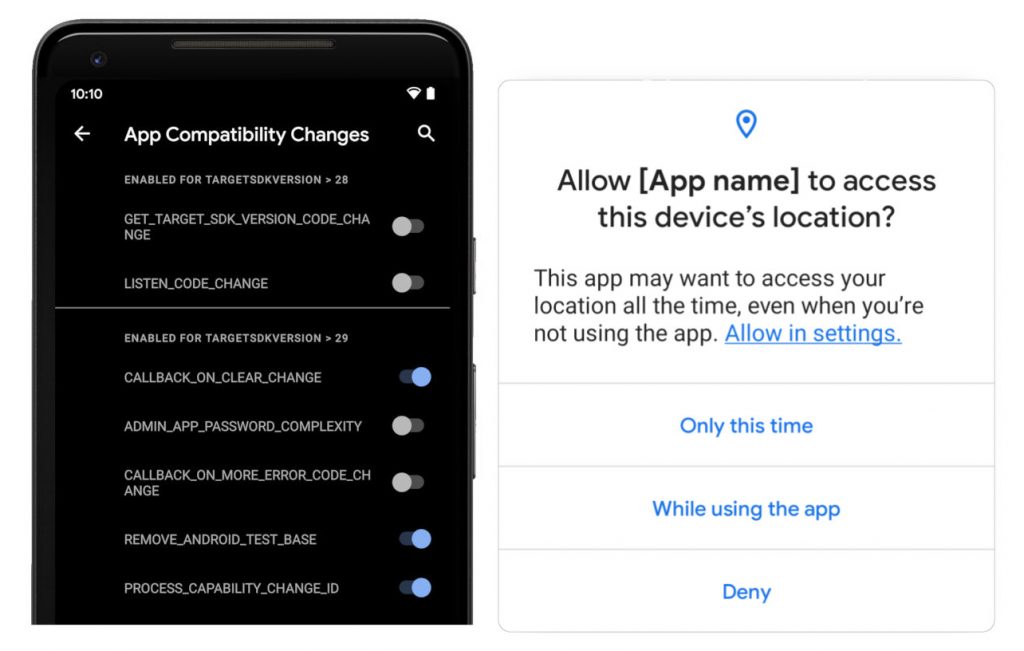
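
Apps typically combine the 5G dynamic meteredness and bandwidth estimator APIs from the start of this list when they pick a streaming quality. The sketch below is plain Java rather than Android code, so it runs anywhere. On a device, the two inputs would usually come from `NetworkCapabilities`: for example `hasCapability(NET_CAPABILITY_NOT_METERED)` (or the Android 11 `NET_CAPABILITY_TEMPORARILY_NOT_METERED`) and `getLinkDownstreamBandwidthKbps()`. The `QualityPicker` class, its quality tiers, and the kbps thresholds are hypothetical choices for illustration, not platform values.

```java
// Hypothetical helper (not an Android SDK class): chooses a streaming quality
// tier from two network signals. On a device, `unmetered` and `downKbps` would
// be read from NetworkCapabilities; here they are plain parameters.
final class QualityPicker {

    // Illustrative tiers an app might offer.
    enum Quality { LOW, MEDIUM, HIGH, ULTRA }

    private QualityPicker() {}

    /**
     * @param unmetered true if the network has NET_CAPABILITY_NOT_METERED or
     *                  NET_CAPABILITY_TEMPORARILY_NOT_METERED
     * @param downKbps  estimated downstream bandwidth, e.g. from
     *                  getLinkDownstreamBandwidthKbps()
     */
    static Quality pick(boolean unmetered, int downKbps) {
        // Thresholds are assumptions chosen for illustration only.
        Quality byBandwidth;
        if (downKbps >= 25_000) {
            byBandwidth = Quality.ULTRA;
        } else if (downKbps >= 5_000) {
            byBandwidth = Quality.HIGH;
        } else if (downKbps >= 1_500) {
            byBandwidth = Quality.MEDIUM;
        } else {
            byBandwidth = Quality.LOW;
        }

        // On a metered network, cap the tier at MEDIUM to limit data usage.
        if (!unmetered && byBandwidth.compareTo(Quality.MEDIUM) > 0) {
            return Quality.MEDIUM;
        }
        return byBandwidth;
    }
}
```

For example, `pick(false, 50_000)` returns `MEDIUM`. The bandwidth estimate alone would allow `ULTRA`, but the metered connection caps it. Keeping the policy in a pure function like this also makes it easy to unit-test away from a device.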

- One-time permission – For the most sensitive types of data (not just location but also the device microphone and camera), users can now grant temporary access through a one-time permission. With this permission, an app can access the data only until the user moves away from it, and it must request permission again for the next access.

- Scoped storage – Several enhancements, including opt-in raw file path access for media, an updated DocumentsUI, and batch edit operations in MediaStore. Based on developer feedback, Google is also giving developers more time to migrate: the scoped storage changes will apply to an app only once it targets Android 11.

- Biometrics – BiometricPrompt now supports three authenticator types with different levels of granularity: strong, weak, and device credential. The BiometricPrompt flow has also been decoupled from the app’s Activity lifecycle, which makes it easier to integrate with various app architectures and improves the transaction UI. All apps using biometric authentication should move to the BiometricPrompt APIs, which are also available in AndroidX for compatibility with earlier versions of Android.

- Platform hardening – Google has expanded its use of compiler-based sanitizers in security-critical components, including BoundSan, IntSan, CFI, and Shadow-Call Stack. Apps targeting Android 11 or higher get heap pointer tagging, which helps them catch memory issues in production. Google has also used HWASan to find and fix many memory errors in the system, and it now offers HWASan-enabled system images to help developers find such issues in their apps.

- Secure storage and sharing of data – Apps can now share data blobs more easily and safely with other apps through BlobStoreManager. The blob store is ideal for use cases such as sharing ML models among multiple apps for the same user.

- Identity credentials – Android 11 adds platform support for secure storage and retrieval of verifiable identification documents, such as ISO 18013-5-compliant mobile driving licenses. Google says it will share more details on this soon.

- Minimizing the impact of behavior changes – Google says it has minimized behavior changes that could affect apps by closely reviewing their impact and, wherever possible, making them opt-in until you set targetSdkVersion to ‘R’ in your app.

- Easier testing and debugging – Many of the breaking changes are toggleable, meaning you can force-enable or disable each change individually from Developer options or adb. As a result, you no longer need to change targetSdkVersion or recompile your app for basic testing.

- Updated greylists – The lists of restricted non-SDK interfaces have been updated.

- Dynamic resource loader – While migrating away from non-SDK interfaces, developers asked Google for a public API to load resources and assets dynamically at runtime, so Android 11 adds a Resource Loader framework.

- New platform stability milestone – Android 11 gets a new release milestone called “Platform Stability”, expected in early June. This milestone includes not only final SDK/NDK APIs but also final internal APIs and system behaviors that may affect apps.

- Call screening service improvements – Call-screening apps can now do more to help users. Apps can get the incoming call’s STIR/SHAKEN verification status as part of the call details, and they can customize a system-provided post-call screen that lets users perform actions such as marking a call as spam or adding the caller to contacts.

- Wi-Fi suggestion API enhancements – The Wi-Fi suggestion API has been extended to give connectivity management apps greater ability to manage their own networks. For example, they can force a disconnection by removing a network suggestion, manage Passpoint networks, and receive more information about the quality of connected networks, among other management changes.

- Passpoint enhancements – Android now enforces, and notifies users about, the expiration date of a Passpoint profile; supports Common Name specification in the profile; and allows self-signed private CAs for Passpoint R1 profiles. Connectivity apps can now use the Wi-Fi suggestion API to manage Passpoint networks.

- HEIF animated drawables – The ImageDecoder API now lets you decode and render image sequence animations stored in HEIF files, so you can use high-quality assets while minimizing the impact on network data and APK size. Compared to animated GIFs, HEIF image sequences can offer drastic file-size reductions. Developers can display HEIF image sequences in their apps by calling decodeDrawable with a HEIF source; if the source contains a sequence of images, an AnimatedImageDrawable is returned.

- Native image decoder – New NDK APIs let apps decode and encode images (such as JPEG, PNG, and WebP) from native code for graphics or post-processing, while keeping the APK smaller because you don’t need to bundle an external library. The native decoder also benefits from Android’s process for ongoing platform security updates. See the NDK sample code for examples.

- Muting during camera capture – Apps can use new APIs to mute vibration from ringtones, alarms, or notifications while a camera session is active.

- Bokeh modes – Apps can use metadata tags to enable bokeh modes on camera capture requests on devices that support them. A still-image mode offers the highest-quality capture, while a continuous mode ensures that capture keeps up with sensor output, for example during video capture.

- Low-latency video decoding in MediaCodec – Low-latency video is critical for real-time video streaming apps and services like Stadia. Video codecs that support low-latency playback return the first frame of the stream as quickly as possible after decoding starts. Apps can use new APIs to check for and configure low-latency playback for a specific codec.

- HDMI low-latency mode – Apps can use new APIs to check for and request auto low latency mode (also known as game mode) on external displays and TVs. In this mode, the display or TV disables graphics post-processing in order to minimize latency.