Google has announced that it has open sourced its image captioning model, dubbed Show and Tell, in TensorFlow. According to the company, the improved system is much faster to train and produces more detailed and accurate descriptions than the original.

Google says the model's image classification component now achieves 93.9% accuracy on the ImageNet classification task, a significant increase from 89.6% just two years ago. The company had to train both the vision and language components using captions written by real people. Google first published a paper on the model in 2014 and released an update in 2015 documenting a newer, more accurate version.

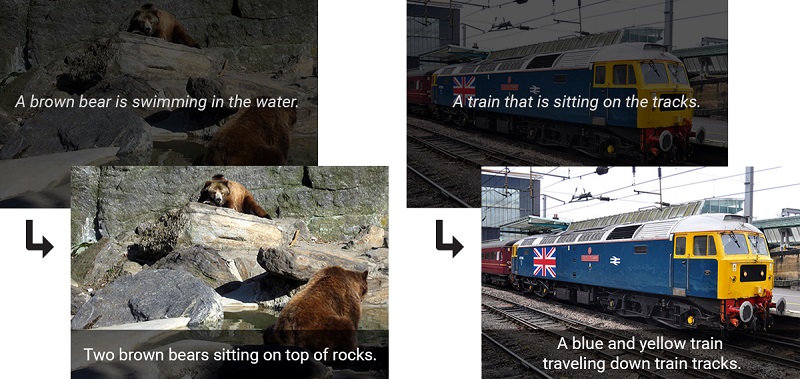

In its announcement, Google wrote: "Excitingly, our model does indeed develop the ability to generate accurate new captions when presented with completely new scenes, indicating a deeper understanding of the objects and context in the images. Moreover, it learns how to express that knowledge in natural-sounding English phrases despite receiving no additional language training other than reading the human captions."

The improvements apply recent advances in computer vision and machine translation to the image-captioning problem.