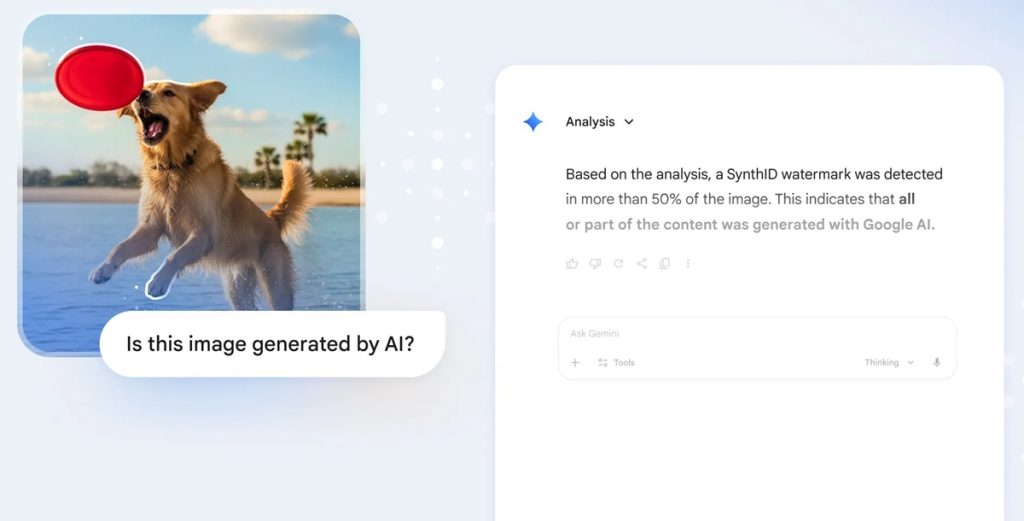

Google is expanding its tools for identifying AI-generated content by bringing SynthID-based image verification directly to the Gemini app. The update is designed to give users clear context about whether an image was created or edited using Google’s AI models.

SynthID verification in Gemini

Users can upload an image into the Gemini app and ask questions such as “Was this created with Google AI?” or “Is this AI-generated?” Gemini will then analyze the image, check for the SynthID watermark, and provide contextual information based on the findings.

SynthID, introduced in 2023, embeds imperceptible signals into AI-generated or AI-edited content. According to Pushmeet Kohli, VP of Science and Strategic Initiatives at Google DeepMind, more than 20 billion AI-generated items have been watermarked with SynthID to date. Google has also been testing the SynthID Detector portal with journalists and media professionals.

How the verification works

- Upload an image into the Gemini app

- Ask whether it was created using Google AI

- Gemini checks for a SynthID watermark

- The system returns information about the content’s possible origin

Upcoming expansions

Google states that this rollout builds on ongoing work to provide more context for images in Search and on research initiatives such as Backstory from Google DeepMind. The company plans to extend SynthID verification to additional formats, including video and audio, and to bring it to more Google surfaces such as Search.

C2PA metadata integration

Google is also collaborating with industry partners through the Coalition for Content Provenance and Authenticity (C2PA). Beginning this week, images generated by Nano Banana Pro (Gemini 3 Pro Image) in the Gemini app, Vertex AI and Google Ads will include C2PA metadata.

Google says this capability will expand to more products and surfaces in the coming months. Over time, the company will extend verification to support C2PA content credentials, allowing users to check the origins of content produced by models outside of Google’s ecosystem.
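For readers curious what "including C2PA metadata" can look like at the file level, here is a minimal sketch in Python. It assumes the image is a JPEG and that the C2PA manifest is embedded the way the C2PA specification describes for JPEG, as JUMBF boxes carried in APP11 marker segments with a manifest store labelled `c2pa`. The function names `iter_jpeg_segments` and `has_c2pa_hint` are hypothetical, and the check is only a heuristic: it looks for the presence of such a segment and does not parse the manifest or validate any signature. It says nothing about how Google's own tooling works.

```python
import struct

APP11 = 0xEB  # JPEG marker segment used for JUMBF boxes
SOS = 0xDA    # start of scan: compressed image data follows
EOI = 0xD9    # end of image
# Markers that carry no length field (TEM and RST0..RST7).
STANDALONE = {0x01, *range(0xD0, 0xD8)}


def iter_jpeg_segments(data: bytes):
    """Yield (marker, payload) for each header segment before the scan data."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG file (missing SOI marker)")
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = data[pos + 1]
        if marker == 0xFF:  # fill byte before a marker, skip it
            pos += 1
            continue
        if marker in (SOS, EOI):
            return
        if marker in STANDALONE:
            pos += 2
            continue
        # The 2-byte big-endian length counts itself but not the marker.
        (length,) = struct.unpack(">H", data[pos + 2 : pos + 4])
        if length < 2:
            raise ValueError(f"invalid segment length at offset {pos}")
        yield marker, data[pos + 4 : pos + 2 + length]
        pos += 2 + length


def has_c2pa_hint(data: bytes) -> bool:
    """Heuristic: True if an APP11 segment appears to carry a C2PA manifest."""
    for marker, payload in iter_jpeg_segments(data):
        # Assumption: JUMBF-in-APP11 payloads start with the "JP" identifier
        # and the C2PA manifest store uses the label "c2pa".
        if marker == APP11 and payload[:2] == b"JP" and b"c2pa" in payload:
            return True
    return False
```

A call such as `has_c2pa_hint(open("photo.jpg", "rb").read())` (with a file name of your choosing) reports only whether a manifest appears to be present. Confirming who signed it, and whether the image has been altered since, requires a full C2PA validator.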

Speaking on the development, Laurie Richardson, Vice President of Trust and Safety at Google, said:

“This work remains central to our commitment to building bold and responsible AI. We look forward to further contributing to the future of AI transparency.”