Recently, NVIDIA founder and CEO Jensen Huang delivered a keynote from his kitchen, presenting a range of NVIDIA products and discussing his vision for next-generation computing.

According to Huang, the coronavirus pandemic upended the original plan to deliver the keynote live at NVIDIA’s GPU Technology Conference (GTC) in San Jose in late March.

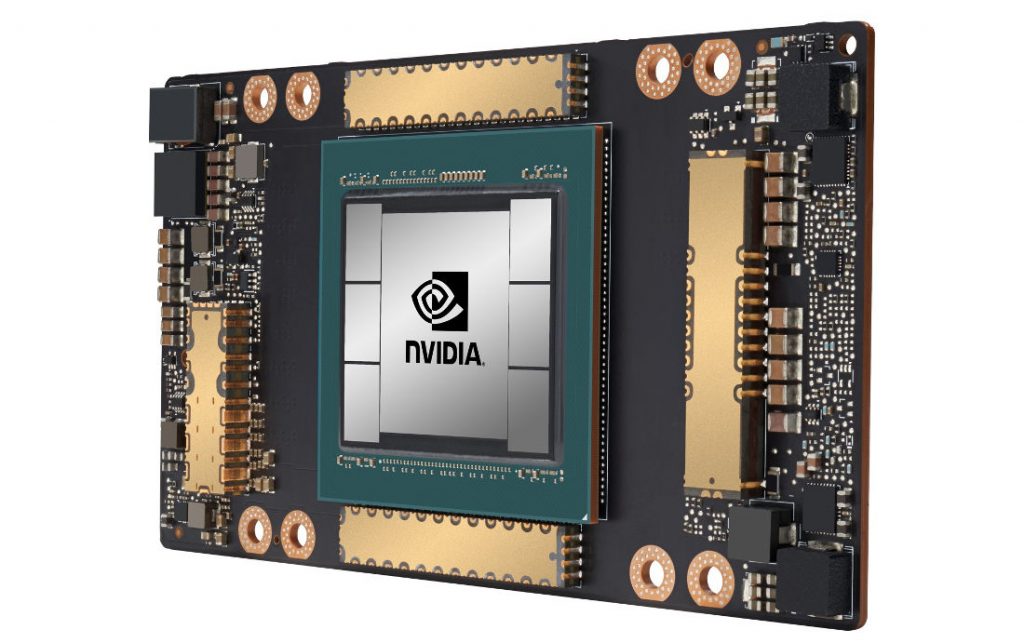

NVIDIA Ampere architecture

NVIDIA A100 is the first GPU based on the NVIDIA Ampere architecture, which delivers the greatest generational performance leap in NVIDIA’s eight generations of GPUs. Beyond AI training and inference, it is also built for data analytics, scientific computing, and cloud graphics.

According to Huang, eighteen of the world’s leading service providers and system builders are incorporating the A100, including Alibaba Cloud, Amazon Web Services, Baidu Cloud, Cisco, Dell Technologies, Google Cloud, Hewlett Packard Enterprise, Microsoft Azure, and Oracle.

The A100 and the NVIDIA Ampere architecture it is built on boost performance by up to 20x over their predecessors. The A100 also packs more than 54 billion transistors, making it the world’s largest 7-nanometer processor. Its other key features include third-generation Tensor Cores with TF32, structural sparsity acceleration, Multi-Instance GPU (MIG), and third-generation NVLink technology.

With these features, NVIDIA promises 6x higher performance than its previous-generation Volta architecture for training and 7x higher performance for inference.
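
To make the TF32 format listed above more concrete: TF32 keeps FP32’s 8-bit exponent (and therefore its numeric range) but only 10 explicit mantissa bits, the same precision as FP16. The Python sketch below emulates that precision loss by zeroing the lowest 13 mantissa bits of a value’s float32 encoding. This is a simplified assumption: it rounds toward zero and does not model the hardware’s actual rounding behavior, and `to_tf32` is a hypothetical helper, not an NVIDIA API.

```python
import math
import struct

# TF32: 1 sign bit, 8 exponent bits (same as FP32), 10 mantissa bits (FP32 has 23).
DROPPED_MANTISSA_BITS = 23 - 10
KEEP_MASK = 0xFFFFFFFF ^ ((1 << DROPPED_MANTISSA_BITS) - 1)


def to_tf32(x: float) -> float:
    """Hypothetical helper: emulate TF32 precision by truncating (rounding toward zero)
    the low 13 mantissa bits of x's float32 encoding. NaN payloads and the hardware's
    actual rounding mode are not modeled."""
    (bits,) = struct.unpack("<I", struct.pack("<f", x))  # float32 bit pattern
    (y,) = struct.unpack("<f", struct.pack("<I", bits & KEEP_MASK))
    return y


print(to_tf32(math.pi))       # → 3.140625
print(to_tf32(1.0 + 2**-10))  # → 1.0009765625 (smallest TF32 step above 1.0)
print(to_tf32(1.0 + 2**-11))  # → 1.0 (finer than TF32 precision, truncated away)
```

Because the exponent bits are untouched, very large or very small values keep their magnitude; only the number of significant digits shrinks.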

NVIDIA DGX A100 with 5 petaflops of performance

NVIDIA is also shipping the third generation of its DGX AI system, the NVIDIA DGX A100, which is based on the A100 and billed as the world’s first 5-petaflops server. Each DGX A100 can be partitioned into as many as 56 instances, each running an independent application.

This allows a single server either to “scale up” and race through computationally intensive tasks such as AI training, or to “scale out” for AI deployment (inference). The A100 will also be available to cloud providers and partner server makers as HGX A100.
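
The 56-instance figure follows from the hardware layout: a DGX A100 contains eight A100 GPUs, and Multi-Instance GPU (MIG) can split each A100 into as many as seven isolated GPU instances, so 8 × 7 = 56. The sketch below spells out that arithmetic. The round-robin `placement` function is purely hypothetical; it shows one way workloads could be spread across instances, not how NVIDIA’s software actually schedules them.

```python
# Hardware layout (public DGX A100 specs, not stated in the keynote text):
# 8 A100 GPUs per DGX A100, and MIG allows up to 7 GPU instances per A100.
GPUS_PER_DGX_A100 = 8
MAX_MIG_INSTANCES_PER_GPU = 7


def max_isolated_workloads(gpus: int = GPUS_PER_DGX_A100,
                           instances_per_gpu: int = MAX_MIG_INSTANCES_PER_GPU) -> int:
    """Upper bound on independent workloads when every GPU is fully partitioned."""
    return gpus * instances_per_gpu


def placement(workload_id: int) -> tuple[int, int]:
    """Hypothetical round-robin placement: map a workload index to (gpu, mig_instance).

    Consecutive workloads land on different GPUs first, so each GPU's
    instances are filled one layer at a time.
    """
    total = max_isolated_workloads()
    if not 0 <= workload_id < total:
        raise ValueError(f"workload_id must be in [0, {total})")
    return workload_id % GPUS_PER_DGX_A100, workload_id // GPUS_PER_DGX_A100


print(max_isolated_workloads())                   # → 56
print(placement(0), placement(9), placement(55))  # → (0, 0) (1, 1) (7, 6)
```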

Notably, a data center built on five DGX A100 systems for AI training and inference, running on just 28 kilowatts of power and costing $1 million, can do the work of a typical data center with 50 DGX-1 systems for AI training and 600 CPU systems, which consumes 630 kilowatts and costs over $11 million.
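
The comparison reduces to two simple ratios. The sketch below uses only the figures quoted above; the `DataCenter` type is a hypothetical convenience, and because the legacy cost is given as “over $11 million”, the cost ratio is a lower bound.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataCenter:
    name: str
    power_kw: float
    cost_usd: float


# Figures as quoted in the keynote; the legacy cost is a lower bound ("over $11 million").
ampere = DataCenter("5x DGX A100", power_kw=28, cost_usd=1_000_000)
legacy = DataCenter("50x DGX-1 + 600 CPU systems", power_kw=630, cost_usd=11_000_000)


def savings(new: DataCenter, old: DataCenter) -> tuple[float, float]:
    """Return (power reduction factor, cost reduction factor) of new relative to old."""
    return old.power_kw / new.power_kw, old.cost_usd / new.cost_usd


power_x, cost_x = savings(ampere, legacy)
print(f"{power_x:.1f}x less power, at least {cost_x:.0f}x lower cost")
# → 22.5x less power, at least 11x lower cost
```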

DGX SuperPOD

NVIDIA also announced the next-generation DGX SuperPOD. Powered by 140 DGX A100 systems and Mellanox networking technology, it offers 700 petaflops of AI performance, putting it on par with one of the world’s 20 fastest computers.
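
The 700-petaflops figure is consistent with the per-system rating given for the DGX A100: 140 systems at 5 petaflops each. A minimal sketch of that arithmetic, assuming peak performance simply adds up across systems (real throughput also depends on the networking and the workload):

```python
# Per-system peak AI performance quoted for the DGX A100.
PETAFLOPS_PER_DGX_A100 = 5
SUPERPOD_SYSTEMS = 140


def aggregate_petaflops(systems: int, pf_per_system: int = PETAFLOPS_PER_DGX_A100) -> int:
    """Naive peak estimate: assumes performance scales linearly with system count."""
    return systems * pf_per_system


total_pf = aggregate_petaflops(SUPERPOD_SYSTEMS)
print(total_pf, "petaflops =", total_pf / 1000, "exaflops")  # → 700 petaflops = 0.7 exaflops
```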

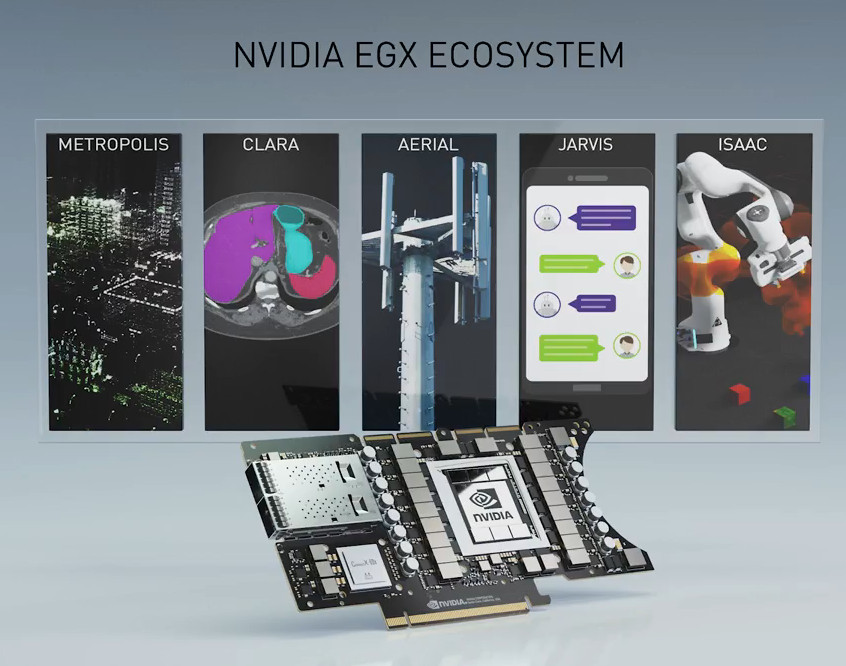

NVIDIA EGX A100

The CEO also announced NVIDIA EGX A100, which brings powerful real-time cloud-computing capabilities to the edge. Its NVIDIA Ampere architecture GPU offers third-generation Tensor Cores and new security features, and the platform includes secure, lightning-fast networking capabilities.