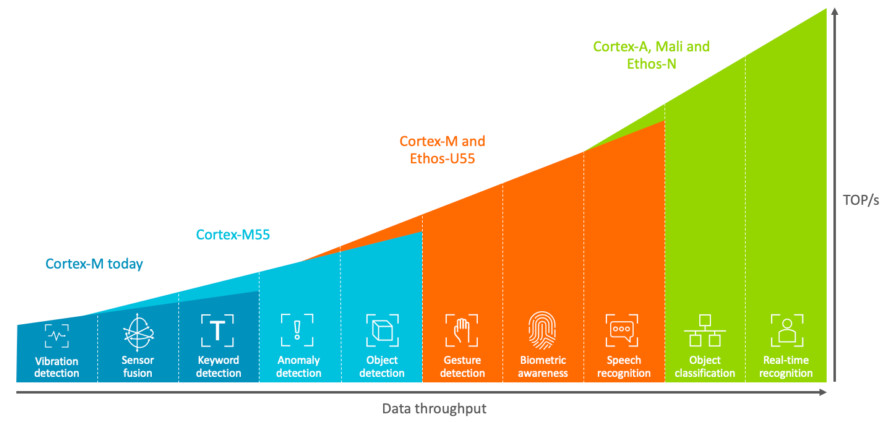

Arm has announced the Cortex-M55 processor and the Arm Ethos-U55 NPU, which the company says is the industry’s first microNPU (Neural Processing Unit) for Cortex-M. Together, the two are designed to deliver a combined 480x leap in ML performance for microcontrollers.

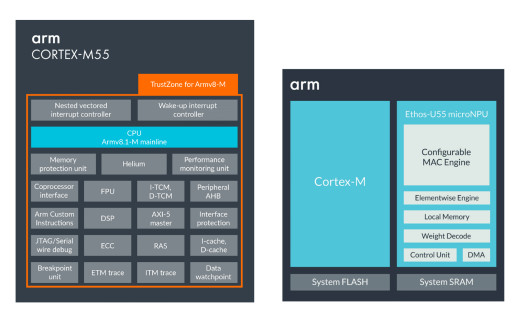

Features of Arm Cortex-M55 Processor

- Enhanced endpoint AI performance: The first processor based on Arm Helium technology, delivering the highest and most efficient ML and DSP performance for Cortex-M.

- Flexibility to differentiate: Arm Custom Instructions extend the processor’s capabilities for workload-specific optimization.

- Faster time to market: The Corstone-300 reference design offers the fastest, most secure way to incorporate the Cortex-M55 into an SoC.

- Simplified software development: Integrated into a single developer toolchain supported by a broad ecosystem of software, tools, libraries and resources.

- Arm Helium Technology: Helium is a new vector instruction set extension in the Armv8.1-M architecture that enables a significant uplift in DSP and ML capabilities. The vector processing extension adds over 150 new scalar and vector instructions, enabling efficient computation on 8-bit, 16-bit, and 32-bit fixed-point data. The 16-bit and 32-bit fixed-point formats are used in traditional signal processing applications, such as audio processing.

- Floating-point Unit: The Cortex-M55 Floating-point Unit (FPU) natively supports vector and scalar half-precision and single-precision floating-point datatypes. Additionally, the FPU provides native support for scalar double-precision floating-point calculations.

- Security: Arm TrustZone technology is supported in the Cortex-M55 processor, reducing the potential for software-based attacks by isolating the critical information from the rest of the application.
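The Helium item mentions 16-bit fixed-point data for audio processing. As a rough illustration of that arithmetic (not of Helium itself), the minimal Python sketch below models a Q15 multiply-accumulate: two Q15 values multiply into a Q30 product, products are summed in a wide accumulator, and the sum is shifted back to Q15 and saturated. On a Cortex-M55, Helium vector instructions would process several such lanes in parallel; the function names here are hypothetical, and this is a scalar reference model, not Helium intrinsics or a CMSIS-DSP call.

```python
Q15_MAX = 32767   # largest Q15 value, just under +1.0
Q15_MIN = -32768  # smallest Q15 value, exactly -1.0


def float_to_q15(x: float) -> int:
    """Convert a float in [-1.0, 1.0) to Q15, saturating out-of-range values."""
    q = round(x * 32768)
    return max(Q15_MIN, min(Q15_MAX, q))


def q15_to_float(q: int) -> float:
    """Convert a Q15 integer back to a float."""
    return q / 32768


def q15_dot(a: list[int], b: list[int]) -> int:
    """Dot product of two Q15 vectors, returned as a saturated Q15 value.

    Each Q15 * Q15 product is a Q30 value. Python ints never overflow, so
    `acc` stands in for a wide hardware accumulator. This sketch saturates
    only once, after shifting the final sum back to Q15.
    """
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    acc = 0
    for x, y in zip(a, b):
        acc += x * y  # Q15 * Q15 -> Q30
    result = acc >> 15  # Q30 -> Q15; arithmetic shift truncates toward -inf
    return max(Q15_MIN, min(Q15_MAX, result))
```

For example, `q15_dot([float_to_q15(0.5), float_to_q15(0.25)], [float_to_q15(0.5)] * 2)` returns 12288, which `q15_to_float` maps to 0.375 (0.25 + 0.125). Truncating with `>> 15` is the simplest choice; a production DSP routine might round before shifting instead.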

Features of Arm Ethos-U55 microNPU

- Energy Efficient: Provides up to 90 percent energy reduction for ML workloads, such as automatic speech recognition (ASR), compared to previous Cortex-M generations.

- Network Support: Flexible design supports a variety of popular neural networks, including CNNs and RNNs, for audio processing, speech recognition, image classification, and object detection.

- Future-Proof Operator Coverage: Heavy compute operators, such as convolution, LSTM, RNN, pooling, activation functions, and primitive element-wise functions, run directly on the microNPU. Other kernels run automatically on the tightly coupled Cortex-M using CMSIS-NN.

- Offline Optimization: Offline compilation and optimization of neural networks performs operator and layer fusion as well as layer reordering, increasing performance and reducing system memory requirements by up to 90 percent. The optimized ordering delivers higher performance and lower power than non-optimized ordering.

- Integrated DMA: Weights and activations are fetched ahead of time using a DMA connected to system memory via an AXI5 master interface.

- Element-Wise Engine: Optimized for commonly used element-wise operations, such as add, mul and sub, which appear in scaling, LSTM and GRU operations. It also enables future operators composed of these primitive operations.

- Mixed Precision: Supports Int8 and Int16: lower-precision Int8 for classification and detection tasks, and higher-precision Int16 for audio and limited HDR image enhancement.

- Lossless Compression: Advanced, lossless model compression reduces model size by up to 75 percent, increasing system inference performance and reducing power.

- System Integration: Works seamlessly with Cortex-M devices and Arm Corstone systems, and allows designers to configure and build high-performance, power-efficient SoCs while further differentiating with combinations of Arm processors and their own IP.
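The Mixed Precision item mentions Int8 for classification and detection. The announcement does not say how floating-point values are mapped to Int8; a common choice, used for example by TensorFlow Lite’s int8 quantization, is the affine scheme real ≈ scale × (q − zero_point). The minimal Python sketch below assumes an asymmetric, per-tensor variant of that scheme. The function names are hypothetical, and this is not the Ethos-U55 toolchain’s API.

```python
def choose_int8_params(min_val: float, max_val: float) -> tuple[float, int]:
    """Pick (scale, zero_point) mapping [min_val, max_val] onto int8 [-128, 127]."""
    # Extend the range to include 0.0 so that zero is exactly representable.
    min_val = min(min_val, 0.0)
    max_val = max(max_val, 0.0)
    if max_val == min_val:  # degenerate all-zero range
        return 1.0, 0
    scale = (max_val - min_val) / 255.0  # 255 steps between -128 and 127
    zero_point = round(-128 - min_val / scale)
    zero_point = max(-128, min(127, zero_point))  # defensive clamp
    return scale, zero_point


def quantize_int8(x: float, scale: float, zero_point: int) -> int:
    """Map a real value to int8, saturating values outside the representable range."""
    q = round(x / scale) + zero_point
    return max(-128, min(127, q))


def dequantize_int8(q: int, scale: float, zero_point: int) -> float:
    """Recover the approximate real value: real = scale * (q - zero_point)."""
    return scale * (q - zero_point)
```

For example, `choose_int8_params(0.0, 255.0)` gives `(1.0, -128)`, so `quantize_int8(10.0, 1.0, -128)` returns -118 and `dequantize_int8(-118, 1.0, -128)` returns 10.0. Forcing the range to include 0.0 keeps zero exact, so zero padding adds no quantization error. Each value also occupies one byte instead of the four used by float32.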