Back in December, Microsoft introduced a set of new AI-based capabilities to the Bing search engine to deliver better information in search results. Today, Microsoft is rolling out refinements to those capabilities along with several new features.

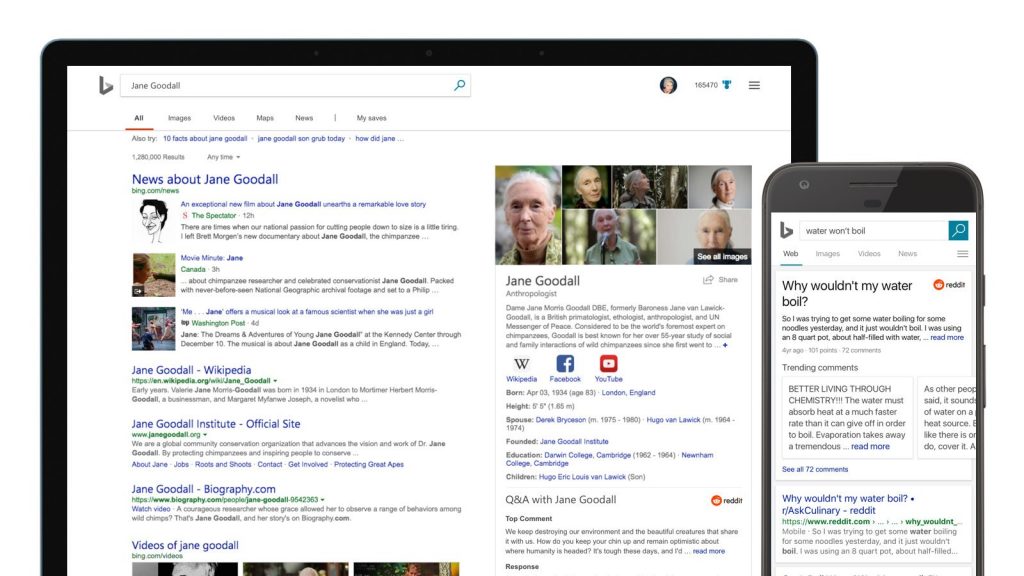

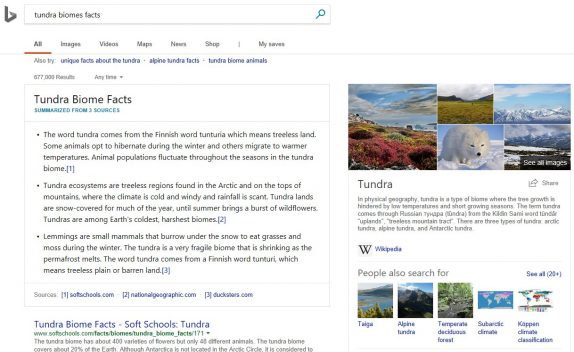

Bing will now aggregate more facts on a given topic from several sites, so you can learn about a topic without having to check multiple sources yourself. Put simply, Bing will gather more information in a single place, reducing the need to search across several websites. Bing will also include a link to each source in case you want more details.

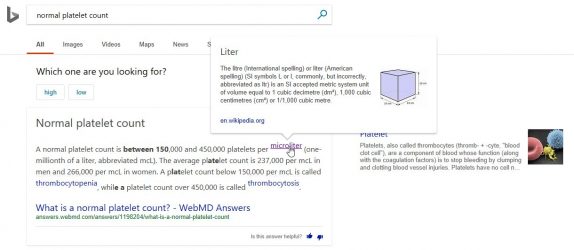

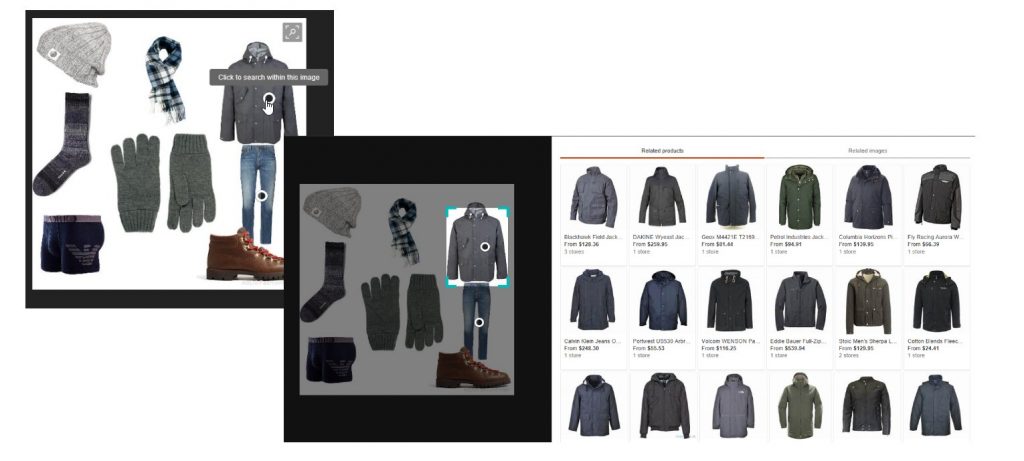

Bing will now provide multiple answers to how-to questions so you can pick whichever works best for you. If you come across an uncommon word in your search results, Bing will show its definition when you hover over it with the cursor. Back in December, Bing rolled out intelligent image search, which allows you to search within an image to find similar images and products. This feature is now expanded to work with more categories rather than just a few fashion items.

Microsoft says that delivering intelligent search requires tasks like machine reading comprehension, which demand immense computational power, so the company is relying on Intel hardware for that. Bing's intelligent search is built on Project Brainwave, a deep learning acceleration platform that runs deep neural networks on Intel Arria and Stratix field-programmable gate arrays (FPGAs) with latency on the order of milliseconds.

Intel’s FPGA chips allow Bing to quickly read and analyze billions of documents across the entire web and provide the best answer to your question in a fraction of a second. According to Microsoft, the FPGAs have helped decrease model latency by more than 10x while also increasing the model size by 10x. All of these improvements and features will be rolling out to Bing over the next few days.