In honor of Global Accessibility Awareness Day (May 15), Google announced updates to its accessibility tools on Android and Chrome, along with new support for developers working on speech recognition.

AI-Powered Accessibility on Android

Angana Ghosh, Director of Product Management for Android, shared that Google is combining its AI technologies, including Gemini, to improve core mobile features for users with vision and hearing challenges.

Enhanced Gemini and TalkBack Features

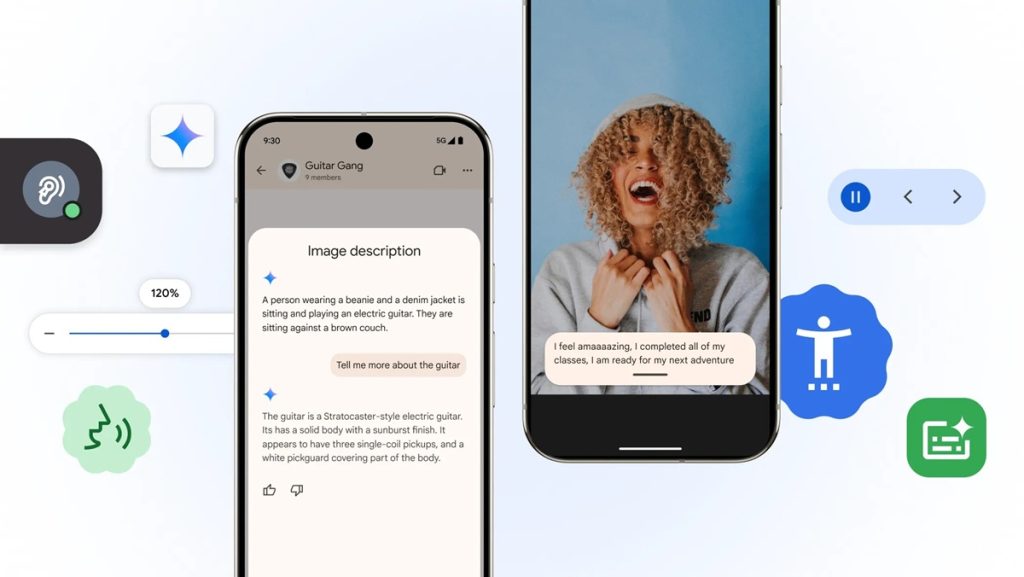

Gemini was added to TalkBack, Android’s screen reader, last year to describe images when no alt text is available. Users can now also ask questions about an image, or about the content on their screen, and receive answers.

For instance, if someone shares a photo of a guitar, users can ask about its type, color, or other features. Likewise, while using shopping apps, users can ask about the fabric of an item or whether there are any active discounts.

Expressive Captions That Capture Speech Nuance

Expressive Captions generates live captions for audio across many Android apps, reflecting not just what is said but how it is said. Ghosh explained that the feature now recognizes stretched sounds, such as a prolonged “nooooo” or an enthusiastic “amaaazing shot.” It also labels additional sounds such as whistling and throat clearing.

This update is rolling out in English to users in the United States, United Kingdom, Canada, and Australia whose devices run Android 15 or newer.

Broadening Speech Recognition Access Worldwide

Google began Project Euphonia in 2019 with a focus on making speech recognition more accessible for individuals with atypical speech patterns, and is now extending those efforts to a global scale.

Developer Tools for Custom Speech Models

Google provides open-source resources on Project Euphonia’s GitHub page. Developers can use these to build personalized audio tools or create speech recognition models tailored to diverse speech patterns.

Support for African Languages

Earlier this year, Google.org partnered with University College London to launch the Centre for Digital Language Inclusion (CDLI). The center is developing open datasets for 10 African languages and building speech recognition technologies, while supporting local developers and organizations.

Accessibility Features for Students

Google’s accessibility tools also support students with disabilities. Chromebooks offer Face Control, which lets users navigate with facial gestures, and Reading Mode, which customizes how text is displayed. Students taking SAT and AP exams through the College Board’s Bluebook testing app can now use Google’s accessibility features, such as the ChromeVox screen reader and Dictation, alongside the app’s own tools.

Making Chrome More Accessible

With over two billion daily users, Chrome continues to improve accessibility.

- Improved Interaction with PDFs: Chrome now applies Optical Character Recognition (OCR) to scanned PDF files, enabling text selection, searching, and screen reader support.

- Page Zoom on Android: Users can zoom in on text without affecting page layout. Zoom settings can be customized for all websites or specific ones through the browser menu.

Angana Ghosh noted, “Advances in AI continue to make our world more and more accessible. We’re building on our work and integrating the best of Google AI and Gemini into core mobile experiences customized for vision and hearing.”