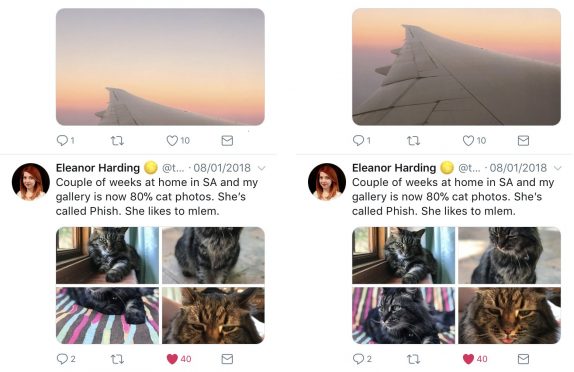

Twitter doesn’t show images in full in its feed. The company says this makes it hard to render a consistent UI experience, and photos are more often than not framed awkwardly. Today Twitter is addressing the problem by using neural networks to auto-crop images intelligently.

The company previously used face detection to focus the view on the most prominent face in a picture, an approach that had its own limitations. Now, with neural networks, Twitter will focus on “salient” image regions, the parts of an image a viewer is most likely to look at. In general, people tend to pay more attention to faces, text, and animals, but also to other objects and regions of high contrast. Data on where people look can be used to train a neural network to identify what people might want to see.

The basic idea is to use the neural network's predictions to center a crop around the most interesting region. However, running a pixel-level saliency analysis on every picture uploaded to Twitter would be slow. To address this, engineers first developed a smaller, faster neural network that only needs to identify the gist of the image. Second, Twitter is said to have developed a pruning technique that iteratively removes feature maps of the neural network that were costly to compute but contributed little to performance.
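To make the cropping step concrete, here is a minimal sketch of centering a fixed-size crop on the most salient point of an image. It is not Twitter's implementation: the function names are hypothetical, and the saliency map is assumed to be a plain 2-D grid of scores that a trained network would produce in practice.

```python
from typing import List, Tuple

SaliencyMap = List[List[float]]


def most_salient_point(saliency: SaliencyMap) -> Tuple[int, int]:
    """Return (row, col) of the highest score in a 2-D saliency map."""
    best_row, best_col = 0, 0
    for r, row in enumerate(saliency):
        for c, value in enumerate(row):
            if value > saliency[best_row][best_col]:
                best_row, best_col = r, c
    return best_row, best_col


def crop_around_salient_point(
    saliency: SaliencyMap, crop_h: int, crop_w: int
) -> Tuple[int, int, int, int]:
    """Return (top, left, bottom, right) of a crop centered on the peak.

    The box is shifted where needed so it stays inside the image.
    `bottom` and `right` are exclusive, like Python slice ends.
    """
    height, width = len(saliency), len(saliency[0])
    if not (0 < crop_h <= height and 0 < crop_w <= width):
        raise ValueError("crop size must fit inside the image")

    row, col = most_salient_point(saliency)
    # Center on the peak, then clamp so the crop does not leave the image.
    top = min(max(row - crop_h // 2, 0), height - crop_h)
    left = min(max(col - crop_w // 2, 0), width - crop_w)
    return top, left, top + crop_h, left + crop_w


# Hypothetical 4x6 saliency map with its peak at row 1, column 4.
example_map = [
    [0.0, 0.1, 0.1, 0.2, 0.3, 0.1],
    [0.0, 0.1, 0.2, 0.4, 0.9, 0.2],
    [0.0, 0.0, 0.1, 0.2, 0.3, 0.1],
    [0.0, 0.0, 0.0, 0.1, 0.1, 0.0],
]
box = crop_around_salient_point(example_map, crop_h=2, crop_w=2)
```

Taking only the single highest-scoring pixel is the simplest possible choice; a production system could just as well weigh a whole salient region or respect a target aspect ratio.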

With these two methods, the company was able to crop media 10x faster than the previous implementation, which lets it detect saliency on every image as soon as it is uploaded and crop it in real time. The update is rolling out to the Twitter desktop site, Android, and iOS.